During my graduate studies, I worked on a research project in the field of Machine Learning as a Research Assistant and Teaching Assistant in Python.

As a research assistant, I was responsible for developing linear regression models in Python using fresh PostgreSQL data to predict the weight of individual pigs. This required a deep understanding of machine learning algorithms, data processing techniques, and data analysis. I worked closely with the research team to refine the models and validate their accuracy. We also developed methods for predictions in pandas DataFrames to make the models more accessible and usable.

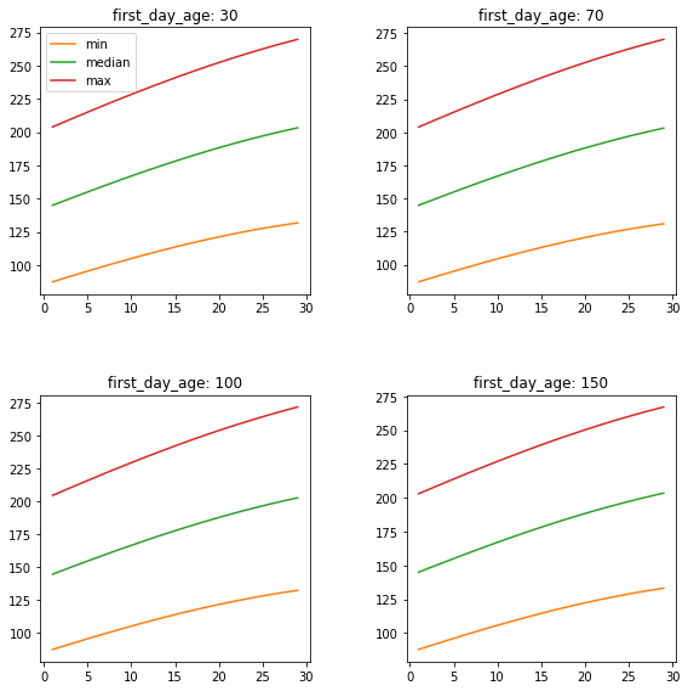

One of the most significant challenges we faced during the project was dealing with data variation and identifying the most significant factors that influenced weight predictions. To address this challenge, we developed a matplotlib graphing function to compare predictions and calculated statistics such as R2 and R values to measure the variation in data and daily prediction accuracy. This allowed us to identify trends and patterns in the data and make improvements to the models.

As a teaching assistant in Python, I was responsible for helping students understand the programming language and its applications in machine learning. This involved developing curriculum materials, leading lab sessions, and grading assignments. By working as a teaching assistant, I developed a deep understanding of Python and how to apply it in real-world machine learning applications.

Throughout the project, I developed several key skills, including data analysis, statistical modeling, and programming. I learned how to work effectively as part of a research team and how to communicate complex ideas to a diverse audience.

In conclusion, my work as a research assistant and teaching assistant in Python allowed me to make significant contributions to a challenging and exciting project in the field of Machine Learning. By developing linear regression models, creating matplotlib graphs, and working as a teaching assistant, I gained valuable experience and developed essential skills that are useful for any data science or software engineering role. I am proud of the work that I accomplished during my tenure, and I look forward to applying my skills and experience to new projects in the future.

Model Code

from asyncio import protocols

import os

os.chdir(os.path.dirname(os.path.abspath(__file__)))

from venv import create

import psycopg2

import aiosql

import numpy as np

import pandas as pd

from dotenv import load_dotenv

import statsmodels.formula.api as smf

import dill

import datetime

import types

from loguru import logger

import matplotlib

#matplotlib.use('Agg')

import matplotlib.pyplot as plt

def predict(self, values_to_predict):

"""

A function to replace the `predict` method in the statsmodels results object.

This predict function adds the random effect predictions to the model's

predictions which do _not_ include the random effects.

Args:

values_to_predict (pd.DataFrame): A dataframe containing the predictors.

Returns:

numpy.ndarray: The predictions.

"""

import numpy as np

# get the random effects from the model

re = self.random_effects

# get random effects predictions for `values_to_predict`

re_predictions = [np.dot(re[dat["rank"]], [1, dat["day_number"]]) for _, dat in values_to_predict.iterrows()]

# sum the fixed effect prediction and the random effects predictions

predicted_weight = self.predict_internal(exog=values_to_predict) + re_predictions

# put it back in a `pandas` DataFrame with the associated rank and day_number

results = values_to_predict

results['predicted_weight'] = predicted_weight

return results

def build_model(series):

"""

`build_model` builds and fits a polynomial model for pseudopigs.

Args:

series (pd.DataFrame): A `pandas` DataFrame containing the following columns for the pig group: `day_number`, `rank`, and `weight`.

Returns:

MixedLMResultsWrapper: A built `statsmodels` model.

"""

# TODO: Maybe drop the fourth degree polynomial from the model?

#model = smf.mixedlm(f"weight ~ day_number + np.power(day_number, 2) + np.power(day_number, 3) + np.power(day_number,4)", series, groups=series["rank"], re_formula="1 + day_number")

model = smf.mixedlm(f"weight ~ day_number + np.power(day_number, 2)", series, groups=series["rank"], re_formula="1 + day_number")

fit = model.fit()

return fit

def cleanup_pseudopigs(df):

"""

Given a set of data directly from the following `aiosql` call:

`queries.get_pseudopigs_for_pig_group(conn, pig_group_id=pig_group_id)`

`cleanup_pseudopigs` converts the data to a `pandas` DataFrame, fills NA's,

and pivots the data.

Args:

df (list): The `aiosql` result (a list).

Returns:

pd.DataFrame: A `pandas` DataFrame containing the pivoted data.

"""

# read data into dataframe

df = pd.DataFrame(df, columns=['id', 'uid', 'created_at', 'updated_at', 'pig_group_id', 'rank', 'weight', 'for_day', 'model_id'])

#df = df[~(pd.to_datetime(df['for_day']) < '2021-10-01')]

# reformat data so the day is on the row index, rank in the column

# index, and value is the weight

df = df.fillna(value=np.nan)

df = df.pivot(values="weight", columns="rank", index="for_day")

return df

def renumber_pseudopigs(df, first_day_age):

"""

Given the result of `cleanup_pseudopigs`, `renumber_pseudopigs`

changes teh row index to be a number representing the day in a series

of days. It relabels columns to be in a specific format, and it converts

the data from wide format to long format.

Args:

df (pd.DataFrame): The output from a call to `cleanup_pseudopigs`.

Returns:

pd.DataFrame: A `pandas` DataFrame that is ready to be used to build our polynomial model.

"""

# TODO: Update to utilize the pigs age. day_number is really the pigs age.

# change the row index to be a number representing the day in a

# series of days. For instance, the following:

# 1/1/2021

# 1/2/2021

# 1/5/2021

# 1/7/2021

# ...

# Gets converted to:

# 0

# 1

# 4

# 6

df = df.reset_index()

df['day_number'] = df.reset_index()['for_day'].diff().dt.days.fillna(0).astype(int).cumsum()

df['day_number'] += first_day_age

df = df.drop(columns=['for_day',])

df = df.set_index('day_number')

# convert from wide to long format, and remove rows where the weight column is NaN

df = pd.melt(df.reset_index(), id_vars=["day_number"])

df.rename(columns={"value":"weight"}, inplace=True)

df.dropna(subset=["weight"], inplace=True)

return df

def main():

load_dotenv()

# establish a database connection

logger.info(f"establishing database connection...")

queries = aiosql.from_path("./queries.sql", "psycopg2")

conn = psycopg2.connect(dbname="gromaster", user="piuser", password="placebo-object-phony-heroes", port=5432, host="grodb.c5s7ln3z3xjl.us-east-2.rds.amazonaws.com")

# get the active pig group's ids

pig_group_res = queries.get_active_pig_groups(conn)

pig_group_ids = [val[0] for val in pig_group_res]

logger.info(f"active pig group ids: {pig_group_ids}")

# filter out pig groups with no new data for the previous day

today = datetime.datetime.now() - datetime.timedelta(days=1)

# today = datetime.datetime.strptime("04/19/2021", "%m/%d/%Y")

tomorrow = today + datetime.timedelta(days=1)

# filter out pig groups that don't have pseudopigs for the day

filtered_pig_group_ids = []

for pig_group_id in pig_group_ids:

result = queries.get_pseudopigs_for_day(conn, pig_group_id=pig_group_id, for_day=today)

if result:

filtered_pig_group_ids.append(pig_group_id)

logger.info(f"filtered pig groups with ppigs yesterday: {filtered_pig_group_ids}")

for pig_group_id in filtered_pig_group_ids:

pseudopigs = queries.get_pseudopigs_for_pig_group(conn, pig_group_id=pig_group_id)

# skip if there are no pseudopigs for a group

if not pseudopigs:

continue

# get the pig age in days on day of first registrations

first_day_age = queries.get_pig_age_at_first_registration(conn, pig_group_id=pig_group_id)[0][0]

# what is the date of the first day where there were enough registrations to calculate pseudopigs?

first_day_of_registrations = queries.get_first_day_of_pseudopigs_for_pig_group(conn, pig_group_id=pig_group_id)[0][0]

# what is the pigs groups age today?

pig_group_age_today = first_day_age + (today.date() - first_day_of_registrations).days

# format/clean our data. resulting dataframe should look like:

# each row is a pseudopigs weight for a day

# each column is a pseudopig with rank given as the column name

# each cell is the weight for the pseudopig

pseudopigs = cleanup_pseudopigs(pseudopigs)

print(f"{pseudopigs=}")

#df = pseudopigs[~(pd.to_datetime(pseudopigs['for_day']) < '2021-09-01')]

# quickly grab the first date with pseudopigs

first_day = pseudopigs.index[0]

print(f"{first_day=}")

# finish changing the row index to a number

pseudopigs = renumber_pseudopigs(pseudopigs, first_day_age)

print(f"{pseudopigs=}")

# build a model to predict pseudopig weights for every

# pseudopig in the given pig group

model = build_model(pseudopigs)

# there are issues in the design of the statsmodels that

# causes issues pickling/unpickling. We are basically

# removing an completely unnecessary class wrapper.

model = model._results

# in order to use a consistent API, i.e. `model.predict(some_df)`

# for all consumers (website, etc.) we move the original predict

# function to predict_internal and we monkey patch the predict method

# to use our _custom_ predict method. The type.MethodType is needed in order

# for our model object to recognize the `self` argument in our custom

# predict function as the model object. This hack is needed because the

# default predict method only considers fixed effects in it's prediction.

model.predict_internal = model.predict

model.predict = types.MethodType(predict, model)

# serialize the model

payload = dill.dumps(model, protocol=5)

# insert the modified model object into the database

model_id = queries.insert_model(conn, model=payload, model_type="polynomial")

# update the pseudopigs model field to point to the model

queries.update_pseudopigs_with_model(conn, model_id=model_id, for_day=today, pig_group_id=pig_group_id)

# commit changes to database

conn.commit()

# for each remaining pseudopig, get the projected pig weights for 1 day in the future

# calculate the suggested_low_threshold and suggested_high_threshold to achieve the light/medium/heavy pen targets

# depending on the feature_opt_in value/setting set by farmer, either set high_threshold and low_threshold to the

# suggested values, or increment current high_threshold and low_threshold by 2 lbs

# calculate the most recent number of pseudopigs

remaining_pseudopigs = pseudopigs.loc[pseudopigs['day_number'] == pseudopigs['day_number'].max(), ].shape[0]

print(f"{remaining_pseudopigs=}")

# predict the weights for tomorrow for each remaining pseudopig

to_predict = pd.DataFrame(data={"rank": list(range(remaining_pseudopigs)), "day_number": [pig_group_age_today + 1]*remaining_pseudopigs})

custom_predict = pd.DataFrame(data={"rank": list(range(remaining_pseudopigs)), "day_number": [pig_group_age_today + 1]*remaining_pseudopigs})

logger.info(f"{to_predict}")

predictions = model.predict(to_predict)

logger.info(f"{predictions}")

# does this farm opt in to our suggested percentiles?

opt_in = queries.does_farm_opt_in_for_pig_group(conn, pig_group_id=pig_group_id)[0][0]

# get the desired light/medium/heavy pen percentages from the database

light, _, heavy = queries.get_percentiles_for_pig_group(conn, pig_group_id=pig_group_id)[0]

# calculate the percentiles for our next day predictions

double_pig_threshold = round(predictions['predicted_weight'].max()) + 10

logger.info(f"suggested double pig threshold: {double_pig_threshold}")

queries.set_double_pig_threshold(conn, pig_group_id=pig_group_id, double_pig_threshold=double_pig_threshold)

conn.commit()

if opt_in:

# if users opt in set the current threshold to the suggested

suggested_high_threshold = round(np.percentile(predictions['predicted_weight'], 100-heavy))

logger.info(f"suggested high threshold: {heavy} - {suggested_high_threshold}")

queries.set_current_high_threshold(conn, pig_group_id=pig_group_id, current_high_threshold=suggested_high_threshold)

conn.commit()

suggested_low_threshold = round(np.percentile(predictions['predicted_weight'], light))

logger.info(f"suggested low threshold: {light} - {suggested_low_threshold}")

conn.commit()

if light and opt_in:

print('Threeway. Sweet. Um, I dunno know what to do here yet.')

conn.close()

if __name__ == '__main__':

main()

- Developed python methods to make predictions in pandas DataFrames and make matplotlib graphing function to compare.

- Calculated statistics such as R2 & R values and measured variation in data and the daily prediction.

Project Title: Pig Weight Prediction using Machine Learning

Project Description:

As a Graduate Research and Teaching Assistant at The Data Mine-Purdue University, I had the opportunity to work with Gro Master to develop a machine learning model for predicting the weight of individual pigs using fresh PostgreSQL data. The project aimed to improve the efficiency and productivity of pig farming by providing accurate predictions of pig weight, which can be used for planning and forecasting.

My responsibilities in this project included:

- Developing linear regression models in Python using pandas DataFrames

- Validating the models and developing methods for predictions

- Creating a matplotlib graphing function to compare predictions and calculated statistics such as R2 and R values to measure variation in data and daily prediction accuracy.